import streamlit as st
import pandas as pd
import matplotlib.pyplot as plt
import plotly.express as px
from model_module import calculate_metrics, load_models, prepare_input_for_prediction, load_dataset
from trading_strategies import run_percentile_strategy, run_BOS_strategy, strategy_description
from visualizations import display_inputs
import scipy.stats as stats

# Model names for price prediction
models_names_price = {
    "Select a model": "",
    "ARIMA": "price_ARIMA_model.pkl",
    "GRU": "price_gru_model.h5",
    "LSTM": "price_lstm_model.h5",
    "Random Forest": "price_randomForest_model.pkl"
}

# Model names for direction prediction
models_names_direction = {
    "Select a model": "",
    "GRU": "sign_gru_model.keras",
    "LSTM": "sign_LSTM_model.keras",
    "Random Forest": "sign_randomForest_model.pkl",
    "Linear Regression": "sign_linearRegression_model.pkl"
}

def home():
    st.title("Electricity Trading Strategy Project")
    st.write("""
    Welcome to the Electricity Trading Strategy Project website! This platform showcases our work on analyzing the electricity market and developing trading strategies for it. Here's what you can explore:
    """)

    st.header("Project Overview")
    st.write("""
    This project forecasts electricity prices in the USA, specifically in the PJM Interconnection market, and develops trading strategies based on these forecasts as well as on established strategies. We use statistical and machine learning models such as SARIMA and GRU to predict price movements and build a robust trading strategy. The models are evaluated on how accurately they predict the direction of the next day's price and how close their predicted prices are to the actual prices.
    """)

    st.header("Key Features")
    st.write("""
    - **Model Overview**: Detailed explanations of the models used for price prediction.
    - **Data Exploration**: Interactive visualizations of historical electricity prices and related data, such as natural gas prices, and of how these variables affect electricity prices.
    - **Predictions**: Live next-day price forecasts based on the latest data and the chosen model.
    - **Trading Strategy**: A comprehensive description of the trading logic and how the strategy is implemented.
    - **Performance Metrics**: Evaluation of the trading strategy's performance with metrics such as the Sharpe ratio, win rate, and ROI.
    - **Backtesting**: Tools to backtest the trading strategy on historical data and assess its viability.
    - **Risk Management**: A discussion of risk management techniques and tools for adjusting strategy parameters.
    """)

    st.header("Model Evaluation")
    st.write("""
    We evaluate our models using two main criteria:

    - **Direction Accuracy**: How accurately the model predicts the direction of the next day's price movement.
    - **Price Accuracy**: How close the predicted prices are to the actual prices.
    """)

def data_exploration():
    from sklearn.preprocessing import MinMaxScaler
    import seaborn as sns

    # First dataset: net generation by source
    data_1 = pd.read_csv('datasets/Net_generation_United_States_all_sectors_monthly.csv')
    st.title("Data Exploration")
    st.write("### Net electricity generation by source")
    st.write(data_1)

    # Convert 'Month' column to datetime format (unparseable dates become NaT)
    try:
        data_1['Month'] = pd.to_datetime(data_1['Month'], format='%b-%y', errors='coerce')
    except ValueError:
        st.error("Error parsing dates in the first dataset. Please check the date format in the CSV file.")
        st.stop()

    # Drop rows with invalid dates
    data_1 = data_1.dropna(subset=['Month'])

    # Select multiple sources to plot
    sources_1 = list(data_1.columns[1:])  # Assuming the first column is the date or time
    selected_sources_1 = st.multiselect("Select sources to plot from the first dataset", options=sources_1, default=sources_1)
    plot_data_1 = data_1[['Month'] + selected_sources_1].dropna()

    # Plot the data
    fig_1, ax_1 = plt.subplots()
    for source in selected_sources_1:
        ax_1.plot(plot_data_1['Month'], plot_data_1[source], label=source)
    ax_1.set_title("Net Electricity Generation by Source")
    ax_1.set_xlabel("Month")
    ax_1.set_ylabel("Net Generation (thousand megawatthours)")
    ax_1.legend()
    plt.xticks(rotation=45)
    st.pyplot(fig_1)

    # Second dataset: net generation by places
    data_2 = pd.read_csv(r"datasets/Net_generation_by places.csv")

    # Display the raw data
    st.write("### Net electricity generation by places")
    st.write(data_2)

    # Convert 'Month' column to datetime format
    try:
        data_2['Month'] = pd.to_datetime(data_2['Month'], format='%y-%b', errors='coerce')
    except ValueError:
        st.error("Error parsing dates in the second dataset. Please check the date format in the CSV file.")
        st.stop()

    # Drop rows with invalid dates
    data_2 = data_2.dropna(subset=['Month'])

    # Select multiple regions to plot
    regions = list(data_2.columns[1:])  # Assuming the first column is the date or time
    selected_regions = st.multiselect("Select regions to plot from the second dataset", options=regions, default=regions)

    # Filter data for the selected regions
    plot_data_2 = data_2[['Month'] + selected_regions].dropna()

    # Plot the data
    fig_2, ax_2 = plt.subplots()
    for region in selected_regions:
        ax_2.plot(plot_data_2['Month'], plot_data_2[region], label=region)
    ax_2.set_title("Net Electricity Generation by Places")
    ax_2.set_xlabel("Month")
    ax_2.set_ylabel("Net Generation (thousand megawatthours)")
    ax_2.legend()
    plt.xticks(rotation=45)
    st.pyplot(fig_2)

    # Third dataset: retail sales of electricity
    data_3 = pd.read_csv(r"datasets/Retail_sales_of_electricity_United_States_monthly.csv")

    # Display the raw data
    st.write("### Retail sales of electricity")
    st.write(data_3)

    # Convert 'Month' column to datetime format
    try:
        data_3['Month'] = pd.to_datetime(data_3['Month'], format='%b-%y', errors='coerce')
    except ValueError:
        st.error("Error parsing dates in the third dataset. Please check the date format in the CSV file.")
        st.stop()

    # Drop rows with invalid dates
    data_3 = data_3.dropna(subset=['Month'])

    # Select multiple sales types to plot
    types = list(data_3.columns[1:])  # Assuming the first column is the date or time
    selected_types = st.multiselect("Select types to plot from the third dataset", options=types, default=types)

    # Filter data for the selected types
    plot_data_3 = data_3[['Month'] + selected_types].dropna()

    # Plot the data (loop variable renamed so it does not shadow the built-in `type`)
    fig_3, ax_3 = plt.subplots()
    for col in selected_types:
        ax_3.plot(plot_data_3['Month'], plot_data_3[col], label=col)
    ax_3.set_title("Retail Sales of Electricity")
    ax_3.set_xlabel("Month")
    ax_3.set_ylabel("Sales (thousand megawatthours)")
    ax_3.legend()
    plt.xticks(rotation=45)
    st.pyplot(fig_3)

    st.subheader('Correlations between variables:')
    st.image(r'assets/Correlations between variables1.png')
    st.image(r'assets/Correlations between variables2.png')

    st.subheader("Relation between Electricity price and Temperature")
    st.image(r"assets/Relation between Electricity price and Temperature.png")

    st.subheader("Net Generated Electricity in the United States")
    st.image(r"assets/Net_generated electricity and Temperature.png")

    st.subheader("Average Electricity price by Month")
    st.image(r"assets/Average Electricity price by Month.png")

    st.subheader("Electricity seasonal decomposition")
    st.image(r"assets/Electricity seasonal decomposition.png")

    st.subheader("Natural Gas seasonal decomposition")
    st.image(r"assets/Natural Gas seasonal decomposition.png")

    st.title("Electricity and Natural Gas Data")
    st.write("Here is a preview of the dataset:")
    AllInOne_Data = load_dataset()
    st.dataframe(AllInOne_Data.head())

    # Date range slider
    st.write("Select the date range to display:")
    min_date = AllInOne_Data['Trade Date'].min().to_pydatetime()
    max_date = AllInOne_Data['Trade Date'].max().to_pydatetime()
    date_range = st.slider("Date", min_date, max_date, (min_date, max_date))

    # Filter data based on the selected date range
    filtered_data = AllInOne_Data[(AllInOne_Data['Trade Date'] >= date_range[0]) & (AllInOne_Data['Trade Date'] <= date_range[1])]

    # Interactive graph for electricity
    st.write("Electricity Prices Over Time:")
    fig_electricity = px.line(filtered_data, x='Trade Date', y='Electricity: Wtd Avg Price $/MWh', title='Electricity Prices Over Time')
    st.plotly_chart(fig_electricity)

    # Interactive graph for natural gas
    st.write("Natural Gas Prices Over Time:")
    fig_natural_gas = px.line(filtered_data, x='Trade Date', y='Natural Gas: Henry Hub Natural Gas Spot Price (Dollars per Million Btu)', title='Natural Gas Prices Over Time')
    st.plotly_chart(fig_natural_gas)

    st.write("### All in One Graph")

    # Drop rows with NaN values in the date columns
    AllInOne_Data = AllInOne_Data.dropna(subset=['Trade Date', 'Electricity: Delivery Start Date', 'Electricity: Delivery End Date'])

    # Get the list of variables (assuming the first three columns are date-related)
    types = list(AllInOne_Data.columns[3:])

    # Multiselect widget for selecting variables to plot
    selected_types = st.multiselect("Select variables to plot", options=types, default=[])

    if selected_types:
        # .copy() avoids pandas' SettingWithCopyWarning when the columns are rescaled below
        plot_AllInOne_Data = AllInOne_Data[['Trade Date'] + selected_types].dropna().copy()

        # Rescale the selected columns to [0, 1] so they can share one axis
        scaler = MinMaxScaler()
        plot_AllInOne_Data[selected_types] = scaler.fit_transform(plot_AllInOne_Data[selected_types])

        # Plot the data
        fig_4, ax_4 = plt.subplots()
        for col in selected_types:
            ax_4.plot(plot_AllInOne_Data['Trade Date'], plot_AllInOne_Data[col], label=col)
        ax_4.set_title("Normalized Electricity Data Over Time")
        ax_4.set_xlabel("Trade Date")
        ax_4.set_ylabel("Normalized Value")
        ax_4.legend()
        plt.xticks(rotation=45)
        st.pyplot(fig_4)
    else:
        st.write("Please select at least one variable to plot.")

    # Moving average graph
    st.write("### Moving Average Graph")

    # User selects the variable and the moving average window
    selected_variable = st.selectbox("Select variable for moving average", options=types)
    moving_average_window = st.slider("Select moving average window (weeks)", min_value=20, max_value=100, value=20)

    if selected_variable:
        # Work on a date-indexed copy so AllInOne_Data keeps its original layout
        ma_data = AllInOne_Data.copy()
        ma_data['Trade Date'] = pd.to_datetime(ma_data['Trade Date'])
        filled_df = ma_data.set_index('Trade Date')[[selected_variable]].sort_index().copy()

        # Fill missing values with the mean of their calendar week (weeks are year-aware)
        filled_df[selected_variable] = (
            filled_df.groupby(pd.Grouper(freq='W'))[selected_variable]
            .transform(lambda x: x.fillna(x.mean()))
        )
        filled_df = filled_df.dropna()

        # Time-based rolling mean spanning the selected number of weeks
        rolling_col = f'rolling_mean_{moving_average_window}'
        filled_df[rolling_col] = filled_df[selected_variable].rolling(f'{7 * moving_average_window}D').mean()

        # Plot the data
        fig_ma, ax_ma = plt.subplots()
        ax_ma.plot(filled_df.index, filled_df[selected_variable], label=selected_variable)
        ax_ma.plot(filled_df.index, filled_df[rolling_col], label=f'Rolling Mean ({moving_average_window} weeks)', linestyle='--')
        ax_ma.set_title(f"{selected_variable} with {moving_average_window}-Week Rolling Mean")
        ax_ma.set_xlabel("Trade Date")
        ax_ma.set_ylabel(selected_variable)
        ax_ma.legend()
        plt.xticks(rotation=45)
        st.pyplot(fig_ma)
    else:
        st.write("Please select a variable to plot.")

    st.write("### Non-Linear Relationships Between Variables (Scatter Plot)")

    # User selects two variables to plot
    variable_x = st.selectbox("Select variable for x-axis", options=types)
    variable_y = st.selectbox("Select variable for y-axis", options=types)

    if variable_x and variable_y:
        fig_non_linear, ax_non_linear = plt.subplots()
        sns.regplot(x=variable_x, y=variable_y, data=AllInOne_Data, ax=ax_non_linear, scatter_kws={'s': 10}, line_kws={"color": "red"})
        ax_non_linear.set_title(f"Scatter plot with regression line for {variable_x} and {variable_y}")
        ax_non_linear.set_xlabel(variable_x)
        ax_non_linear.set_ylabel(variable_y)
        plt.xticks(rotation=45)
        st.pyplot(fig_non_linear)
    else:
        st.write("Please select variables to plot.")

    st.write("### Distributions")
    dist_x = st.selectbox("Select variable to plot its distribution", options=types)

    # Drop missing values so the statistics and the Q-Q plot use the same observations
    dist_values = AllInOne_Data[dist_x].dropna()
    st.write(f"The skewness of this variable is: {dist_values.skew():.4f}")
    # pandas reports excess kurtosis, so a normal distribution scores 0
    st.write(f"The excess kurtosis of this variable is: {dist_values.kurtosis():.4f}")

    fig, axes = plt.subplots(1, 2, figsize=(12, 5))

    # Histogram with KDE
    sns.histplot(dist_values, kde=True, ax=axes[0])
    axes[0].set_title(f'Distribution of {dist_x}')

    # Q-Q plot against a normal distribution
    stats.probplot(dist_values, dist="norm", plot=axes[1])
    axes[1].set_title(f'Q-Q plot of {dist_x}')

    st.pyplot(fig)
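
# Illustrative sketch (hypothetical helper, not called by the app): the moving-average chart
# in data_exploration labels its window in weeks. An alternative way to make the unit explicit
# is to resample the daily series to weekly means first and then take a rolling mean over N
# weekly values. The "W" frequency (weeks ending on Sunday) is an assumption about how weeks
# should be bucketed.
def sketch_weekly_rolling_mean(daily_series, window_weeks):
    """Hypothetical: resample a date-indexed series to weekly means, then apply an N-week rolling mean."""
    import pandas as pd

    series = pd.Series(daily_series).sort_index()
    weekly = series.resample("W").mean()
    # The first window_weeks - 1 weekly values have no full window and stay NaN
    return weekly.rolling(window=window_weeks).mean()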

def models_overview():

st.title("Models Overview")

st.write("""

In this project, various models are employed to predict electricity prices and develop trading strategies. The models are categorized into those used for predicting the sign (direction) of price changes and those used for predicting the actual price. Below is a detailed description of each model used:

""")

# Predicting the Sign

st.header("Predicting the Sign")

st.subheader("Using Deep Learning:")

st.write("""

- **GRU Sign Detection**:

A Gated Recurrent Unit (GRU) model used to predict whether the next day's price will go up or down.

**Components**:

- Update gate

- Reset gate

- Current memory content

- Final memory at current time step

**Special Features**:

- Simplified architecture compared to LSTM

- Faster training due to fewer parameters

**Use Case in Predicting the Sign**:

- To capture the sequential dependencies in electricity price changes and predict the direction.

""")

st.write("""

- **LSTM Sign Detection**:

A Long Short-Term Memory (LSTM) model used for the same purpose as the GRU model.

**Components**:

- Forget gate

- Input gate

- Output gate

- Cell state

**Special Features**:

- Ability to capture long-term dependencies

- Effective in handling vanishing gradient problems

**Use Case in Predicting the Sign**:

- To utilize its memory capabilities for more accurate prediction of price direction over longer periods.

""")

st.subheader("Using Regression Models:")

st.write("""

- **Linear Regression**:

A basic regression model to predict the direction of the price change.

**Components**:

- Dependent variable

- Independent variables

- Coefficients

- Intercept

**Special Features**:

- Simple implementation

- Provides a baseline for comparison

**Use Case in Predicting the Sign**:

- To offer a straightforward approach to predicting price direction based on linear relationships.

- Used the direction of lagged prices as a variable to predict the direction of the next day's price.

""")

st.image(r"assets/LinearRegression.png")

st.write("""

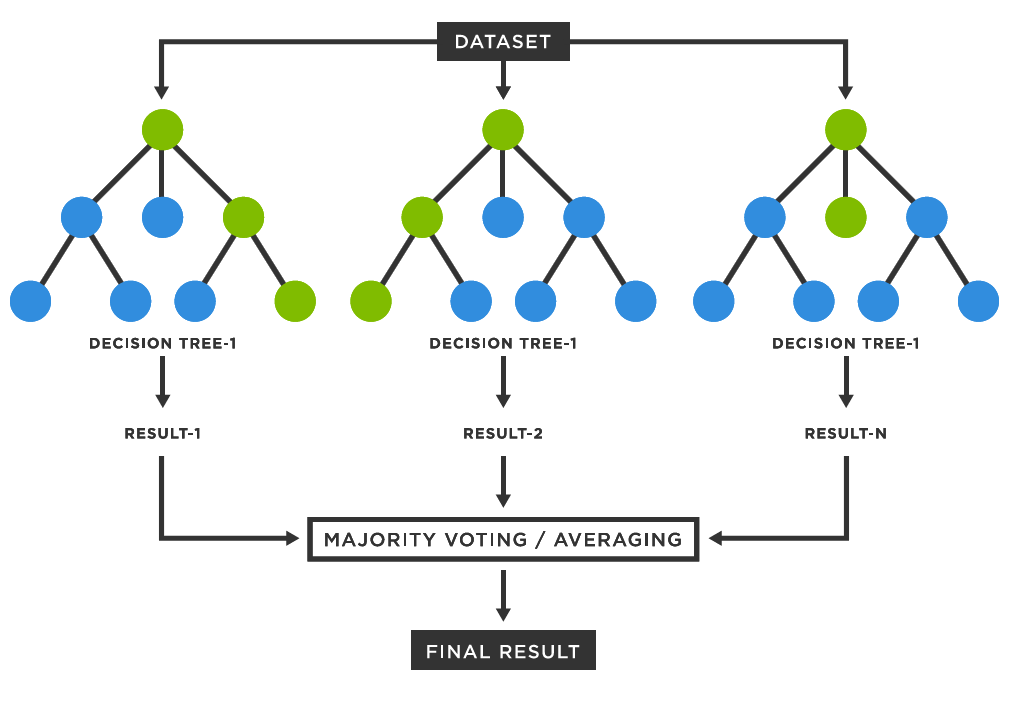

- **Random Forest**:

An ensemble learning method using multiple decision trees to improve prediction accuracy.

**Components**:

- Multiple decision trees

- Bagging

- Majority voting

**Special Features**:

- Reduces overfitting by averaging multiple decision trees

- Handles large datasets with higher dimensionality

**Use Case in Predicting the Sign**:

- To enhance the accuracy of direction prediction by leveraging ensemble methods.

- Used the direction of lagged prices as a variable to predict the direction of the next day's price.

""")

# Predicting the Price

st.header("Predicting the Price")

st.subheader("Naive Forecast:")

st.write("""

A simple model that uses the previous day's price as the forecast for the next day.

**Components**:

- Previous day's price

**Special Features**:

- Minimal computation

- Serves as a baseline for more complex models

**Use Case in This Project**:

- To provide a simple benchmark for evaluating the performance of other models.

""")

st.subheader("Random Forest:")

st.write("""

An ensemble learning method using multiple decision trees to predict the actual price.

**Components**:

- Multiple decision trees

- Bagging

- Aggregation of results

**Special Features**:

- Captures complex interactions between features

- Provides robust and accurate predictions

**Use Case in This Project**:

- To predict actual electricity prices by capturing nonlinear relationships in the data.

- Using features/variables such as natural gas prices and temperature to predict electricity prices.

""")

st.subheader("Machine Learning Models:")

st.write("""

- **ARIMA**:

An Autoregressive Integrated Moving Average model used for time series forecasting.

**Components**:

- **Autoregressive (AR) terms**: These represent past values of the forecast variable in a regression equation. AR terms model the dependency of the variable on its own past values, where "AR(p)" indicates "p" past values are used.

- **Integrated (I) terms**: This reflects the differencing of raw observations in time series data to achieve stationarity. The "I(d)" term denotes the number of times differencing is applied to the series for achieving stationarity.

- **Moving Average (MA) terms**: These involve the dependency between an observation and a residual error from a moving average model applied to lagged observations. MA terms in an "MA(q)" model use "q" past errors to forecast future values.

**ACF and PACF in Time Series Modeling**:

- **Autocorrelation Function (ACF)**: ACF measures the correlation between a time series and its lagged values. For AR models, ACF helps determine the order "p" by showing significant correlations at lags up to "p". For MA models, ACF drops off after lag "q", indicating the order of the moving average.

- **Partial Autocorrelation Function (PACF)**: PACF measures the correlation between a time series and its lagged values while adjusting for the effects of intervening lags. PACF is useful in determining the order of AR models, as it shows direct effects of past lags on the current value, without the indirect effects of shorter lags.

**Special Features**:

- Effective for univariate time series

- Captures various types of temporal patterns

**Use Case in This Project**:

- To model and forecast the electricity price time series based on past values and past forecast errors.

""")

st.image("assets/PACF_ACF.png",caption=" ACF and PACF plots of Electricity Price for ARIMA")

st.write("""

- **SARIMA**:

Seasonal ARIMA model to capture seasonality in the data.

**Components**:

- Seasonal autoregressive (SAR) terms

- Seasonal integrated (SI) terms

- Seasonal moving average (SMA) terms

**Special Features**:

- Incorporates seasonality into ARIMA

- Models complex seasonal patterns

**Use Case in This Project**:

- To predict electricity prices by capturing both seasonal and non-seasonal patterns in the data.

""")

st.write("""

- **Auto ARIMA (SARIMA)**:

Automated selection of the best SARIMA model parameters.

**Components**:

- Automatic parameter tuning

**Special Features**:

- Simplifies model selection process

- Enhances model accuracy by choosing optimal parameters

**Use Case in This Project**:

- To automate the process of finding the best SARIMA model for electricity price forecasting.

""")

st.write("""

- **GARCH**:

Generalized Autoregressive Conditional Heteroskedasticity model to forecast volatility.

**Components**:

- Autoregressive terms for variance

- Moving average terms for variance

**Special Features**:

- Models volatility clustering

- Useful for financial time series

**Use Case in This Project**:

- To forecast the volatility of electricity prices, which can inform risk management strategies.

:max_bytes(150000):strip_icc():format(webp)/GARCH-9d737ade97834e6a92ebeae3b5543f22.png)

""")

st.subheader("Deep Learning Models:")

st.write("""

- **GRU**:

A Gated Recurrent Unit model for predicting the actual price.

**Use Case in predicting the Price**:

- To predict electricity prices by capturing temporal dependencies in the data.

""")

st.write("""

- **LSTM**:

A Long Short-Term Memory model for price prediction.

**Use Case in predicting the Price**:

- To predict electricity prices by leveraging its long-term memory capabilities.

""")

st.write("""

- **LSTM using Normalized Prices**:

LSTM model applied to normalized price data.

**Components**:

- Normalized input data

**Special Features**:

- Improved training efficiency

- Enhanced model performance

**Use Case in This Project**:

- To achieve better model performance by normalizing the input price data.

""")

st.write("""

- **LSTM Regression**:

LSTM model used in a regression setting for price prediction.

**Components**:

- Regression output layer

**Special Features**:

- Directly predicts continuous price values

- Suitable for precise price forecasting

**Use Case in This Project**:

- To provide accurate price predictions by directly modeling the continuous price values.

""")

st.subheader("Model Creation, Training, and Prediction")

st.write("""

Here's a basic example of how a model is created, trained, and used for predictions:

""")

code = '''

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_squared_error

# Sample data

data = pd.read_csv('electricity_prices.csv')

X = data[['feature1', 'feature2', 'feature3']]

y = data['price']

# Split the data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=42)

# Create the model

model = RandomForestRegressor(n_estimators=100, random_state=42)

# Train the model

model.fit(X_train, y_train)

# Make predictions

y_pred = model.predict(X_test)

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

print(f'Mean Squared Error: {mse}')

'''

st.code(code, language='python')
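
# Illustrative sketch (hypothetical helper, not called by the app): the sample autocorrelation
# function described in the ARIMA section, computed directly with NumPy. For lag k it divides
# the lag-k autocovariance by the lag-0 variance, both taken around the full-sample mean,
# which is the standard ACF estimator. The project's ACF/PACF plot image was not necessarily
# produced this way.
def sketch_sample_acf(values, max_lag):
    """Hypothetical: sample autocorrelations for lags 0..max_lag."""
    import numpy as np

    x = np.asarray(values, dtype=float)
    x = x - x.mean()  # center the series
    denom = np.sum(x * x)  # lag-0 sum of squares
    # Pair each value with the value k steps earlier and normalize by the lag-0 term
    return np.array([np.sum(x[k:] * x[:len(x) - k]) / denom for k in range(max_lag + 1)])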

def model_selection():
    AllInOne_Data = load_dataset()

    st.title("Model Selection for Direction Prediction")
    st.write("Choose and compare different models for direction prediction.")
    direction_models = list(models_names_direction.keys())
    selected_direction_model = st.selectbox("Select a model", direction_models, key="direction_model")

    # Show key metrics for the selected model
    if selected_direction_model and selected_direction_model != "Select a model":
        model_name = models_names_direction[selected_direction_model]
        strategy_description(model_name)
        metrics, descriptions = calculate_metrics(model_name, AllInOne_Data)
        st.write(f"Metrics for {selected_direction_model}:")
        st.write(pd.DataFrame({'Metric': list(descriptions.keys()), 'Description': list(descriptions.values())}).set_index('Metric'))
        st.write(metrics)

    st.title("Model Selection for Price Prediction")
    st.write("Choose and compare different models for price prediction.")
    price_models = list(models_names_price.keys())
    selected_price_model = st.selectbox("Select a model", price_models, key="price_model")

    # Show key metrics for the selected model
    if selected_price_model and selected_price_model != "Select a model":
        model_name = models_names_price[selected_price_model]
        strategy_description(model_name)
        metrics, descriptions = calculate_metrics(model_name, AllInOne_Data)
        st.write(f"Metrics for {selected_price_model}:")
        st.write(pd.DataFrame({'Metric': list(descriptions.keys()), 'Description': list(descriptions.values())}).set_index('Metric'))
        st.write(metrics)
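
# Illustrative sketch (hypothetical helper, not called by the app): the naive forecast described
# in the Models Overview (tomorrow's price = today's price), scored with RMSE so it can serve as
# a benchmark when comparing the models on this page. Using RMSE as the score is an assumption.
def sketch_naive_forecast_rmse(prices):
    """Hypothetical: RMSE of the one-day-ahead naive forecast over a price series."""
    import numpy as np

    p = np.asarray(prices, dtype=float)
    forecast = p[:-1]  # the forecast for day t+1 is the observed price on day t
    actual = p[1:]
    return float(np.sqrt(np.mean((actual - forecast) ** 2)))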

def predictions():
    import pmdarima as pm

    st.title("Electricity Prediction")

    # Step 1: Select the prediction type
    prediction_type = st.selectbox("Select Prediction Type", ["", "Price", "Direction"])
    if prediction_type:
        # Reuse the module-level model tables (both include the "Select a model" placeholder)
        models_names = models_names_price if prediction_type == "Price" else models_names_direction

        # Step 2: Select a model based on the prediction type
        model_name = st.selectbox("Select a Model", list(models_names.keys()))
        if model_name and model_name != "Select a model":
            model_file = models_names[model_name]
            model = load_models(model_file)

            # Step 3: Display inputs based on the model's requirements
            inputs = display_inputs(model_file)
            if inputs is not None:
                if st.button("Submit"):
                    if model_name == "ARIMA":
                        inputs, scaler = prepare_input_for_prediction(inputs, model_file)
                        # auto_arima searches for the best (seasonal) ARIMA orders and
                        # returns a model that is already fitted on the inputs
                        model = pm.auto_arima(inputs, seasonal=True, trace=True, error_action='ignore', suppress_warnings=True)
                        # One-step-ahead forecast with a 95% confidence interval (alpha=0.05 by default)
                        forecast, conf_int = model.predict(n_periods=1, return_conf_int=True)
                        prediction = forecast[0]
                        lower_bound, upper_bound = conf_int[0]
                        st.write("The predicted price for the next day is: ${:.5f}".format(prediction))
                        # Escape "$" so Streamlit's markdown does not treat the pair as LaTeX math
                        st.write("95% confidence interval: \\${:.5f} to \\${:.5f}".format(lower_bound, upper_bound))
                    elif model_file in ("price_gru_model.h5", "price_lstm_model.h5"):
                        inputs, scaler = prepare_input_for_prediction(inputs, model_file)
                        predicted_price_scaled = model.predict(inputs)
                        # Map the scaled network output back to the original price units
                        prediction = scaler.inverse_transform(predicted_price_scaled)
                        predicted_value = prediction[0].item()
                        st.write(f"The predicted price for the next day is: ${predicted_value:.5f}")
                    elif model_file == "price_randomForest_model.pkl":
                        inputs, scaler = prepare_input_for_prediction(inputs, model_file)
                        # This model predicts a return rather than a price level
                        predicted_return = model.predict(inputs)
                        predicted_value = predicted_return[0].item() * 100
                        st.write(f"The predicted return of the electricity price is: {predicted_value:.5f}%")
                    else:
                        # Direction models: a positive output is read as an upward move
                        prediction = model.predict(inputs)[0]
                        direction = "up" if prediction > 0 else "down"
                        st.write(f"The predicted direction for the next day is: {direction}")
            else:
                st.write("Please enter all required data for the selected model.")
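
# Illustrative sketch (hypothetical helper, not called by the app): the ARIMA branch of
# predictions() reports a 95% interval computed by pmdarima. Under a normality assumption,
# such an interval is the point forecast plus or minus z times its standard error, with z
# about 1.96 for 95%. The standard error here is an assumed input; the app does not compute it.
def sketch_normal_forecast_interval(forecast, std_error, confidence=0.95):
    """Hypothetical: two-sided normal-approximation interval around a point forecast."""
    from scipy.stats import norm

    z = norm.ppf(0.5 + confidence / 2)  # e.g. the 97.5th percentile for a 95% interval
    return forecast - z * std_error, forecast + z * std_error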

def backtesting():
    AllInOne_Data = load_dataset()

    st.title("Backtesting")
    st.subheader("Interactive backtesting tool")
    starting_amount = st.number_input("Starting Amount", value=1000, min_value=1000)
    strategies = ["Percentile Channel Breakout (Mean Reversion)", "Break of Structure"]
    selected_strategy = st.selectbox("Select a strategy for backtesting", strategies)
    strategy_description(selected_strategy)

    backtest_results = None
    if selected_strategy == "Percentile Channel Breakout (Mean Reversion)":
        backtest_results = run_percentile_strategy(starting_amount, AllInOne_Data)
    elif selected_strategy == "Break of Structure":
        st.image(r"assets/BOS.png")
        backtest_results = run_BOS_strategy(starting_amount, AllInOne_Data)

    if backtest_results is not None:
        st.write("Backtest completed.")
        # Store the results so the Export Results page can reuse them
        st.session_state['backtest_results'] = backtest_results
        st.session_state['strategy_name'] = selected_strategy
        export_results()
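
# Illustrative sketch (hypothetical helper, not the project's run_percentile_strategy): one
# way to generate percentile channel breakout signals with a mean-reversion reading. The channel
# is built from a rolling window of prior prices (shift(1) avoids look-ahead bias); a price
# below the lower percentile gives a buy signal (+1), a price above the upper percentile gives a
# sell signal (-1), and anything else gives 0. The window length and percentile levels are
# assumed parameters.
def sketch_percentile_channel_signals(prices, window=20, lower_pct=0.1, upper_pct=0.9):
    """Hypothetical: +1 / -1 / 0 signals from a rolling percentile channel of past prices."""
    import numpy as np
    import pandas as pd

    prices = pd.Series(prices, dtype=float)
    history = prices.shift(1).rolling(window)  # only prices strictly before each day
    lower = history.quantile(lower_pct)
    upper = history.quantile(upper_pct)
    # Comparisons with the NaN warm-up values are False, so early days get a 0 signal
    signals = np.where(prices < lower, 1, np.where(prices > upper, -1, 0))
    return pd.Series(signals, index=prices.index)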

def export_results():
    import io

    if 'backtest_results' in st.session_state and 'strategy_name' in st.session_state:
        backtest_results = st.session_state['backtest_results']
        strategy_name = st.session_state['strategy_name']
        st.write(backtest_results)

        st.title("Export Results")
        st.write("Choose a format to export the backtesting results.")
        export_format = st.radio("Choose export format", ("CSV", "Excel"))

        if st.button("Export"):
            if export_format == "CSV":
                csv_data = backtest_results.to_csv(index=False).encode('utf-8')
                st.download_button(
                    label="Download CSV",
                    data=csv_data,
                    file_name=f'{strategy_name}_backtest_results.csv',
                    mime='text/csv'
                )
                st.write("Results ready to download as CSV.")
            elif export_format == "Excel":
                # Write the DataFrame to an in-memory Excel file (requires the xlsxwriter package)
                buffer = io.BytesIO()
                with pd.ExcelWriter(buffer, engine='xlsxwriter') as writer:
                    backtest_results.to_excel(writer, index=False, sheet_name='Sheet1')
                # Read the bytes only after the writer has closed and flushed the workbook
                excel_data = buffer.getvalue()
                st.download_button(
                    label="Download Excel",
                    data=excel_data,
                    file_name=f'{strategy_name}_backtest_results.xlsx',
                    mime='application/vnd.openxmlformats-officedocument.spreadsheetml.sheet'
                )
                st.write("Results ready to download as Excel.")
    else:
        st.info("No results available for export. Please run a backtest first.")
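
# Illustrative sketch (hypothetical helper, not called by the app): the Sharpe ratio, win rate,
# and ROI listed under "Performance Metrics" on the Home page, computed from per-period strategy
# returns and the starting/ending portfolio values. Assumptions: a zero risk-free rate,
# annualization with 252 trading periods per year, and a win rate counted over periods with a
# non-zero return (not over individual trades).
def sketch_performance_metrics(period_returns, starting_amount, ending_amount, periods_per_year=252):
    """Hypothetical: annualized Sharpe ratio, per-period win rate, and ROI."""
    import numpy as np

    r = np.asarray(period_returns, dtype=float)
    sharpe = float(np.sqrt(periods_per_year) * r.mean() / r.std(ddof=1))
    traded = r[r != 0]  # ignore flat periods with no position
    win_rate = float(np.mean(traded > 0)) if traded.size else 0.0
    roi = (ending_amount - starting_amount) / starting_amount
    return {"sharpe": sharpe, "win_rate": win_rate, "roi": roi}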

def contact():
    import os
    import smtplib
    from email.mime.multipart import MIMEMultipart
    from email.mime.text import MIMEText
    from dotenv import find_dotenv, load_dotenv

    st.title("Contact Us")

    # Load EMAIL and EMAIL_PASSWORD from a .env file, if one is found
    dotenv_path = find_dotenv()
    load_dotenv(dotenv_path)

    def send_email(name, email, message):
        from_email = os.getenv('EMAIL')
        from_password = os.getenv('EMAIL_PASSWORD')
        to_email = os.getenv('EMAIL')
        if not from_email or not from_password:
            st.error("Email credentials are not configured.")
            return False

        msg = MIMEMultipart()
        msg['From'] = from_email
        msg['To'] = to_email
        msg['Subject'] = "New Contact Form Submission"
        body = f"Name: {name}\nEmail: {email}\nMessage: {message}"
        msg.attach(MIMEText(body, 'plain'))

        # Send the email through Gmail's SMTP server; the context manager closes the connection
        try:
            with smtplib.SMTP('smtp.gmail.com', 587) as server:
                server.starttls()
                server.login(from_email, from_password)
                server.sendmail(from_email, to_email, msg.as_string())
            return True
        except Exception as e:
            st.error(f"An error occurred: {e}")
            return False

    st.write("Please fill out the form below to provide your feedback.")
    with st.form(key='contact_form'):
        name = st.text_input(label="Name")
        email = st.text_input(label="Email")
        message = st.text_area(label="Message")
        submit_button = st.form_submit_button(label='Submit')

    if submit_button:
        if not (name and email and message):
            st.warning("Please fill in your name, email, and message.")
        elif send_email(name, email, message):
            st.success("Thank you for your feedback! Your message has been sent.")
        else:
            st.error("Failed to send your message. Please try again later.")

PAGES = {
    "Home": home,
    "Data Exploration": data_exploration,
    "Models Overview": models_overview,
    "Model Selection": model_selection,
    "Predictions": predictions,
    "Backtesting": backtesting,
    "Export Results": export_results,
    "Contact Us": contact
}

st.sidebar.title("Navigation")
selection = st.sidebar.radio("Go to", list(PAGES.keys()))
page = PAGES[selection]
page()