
# Example agent prompts

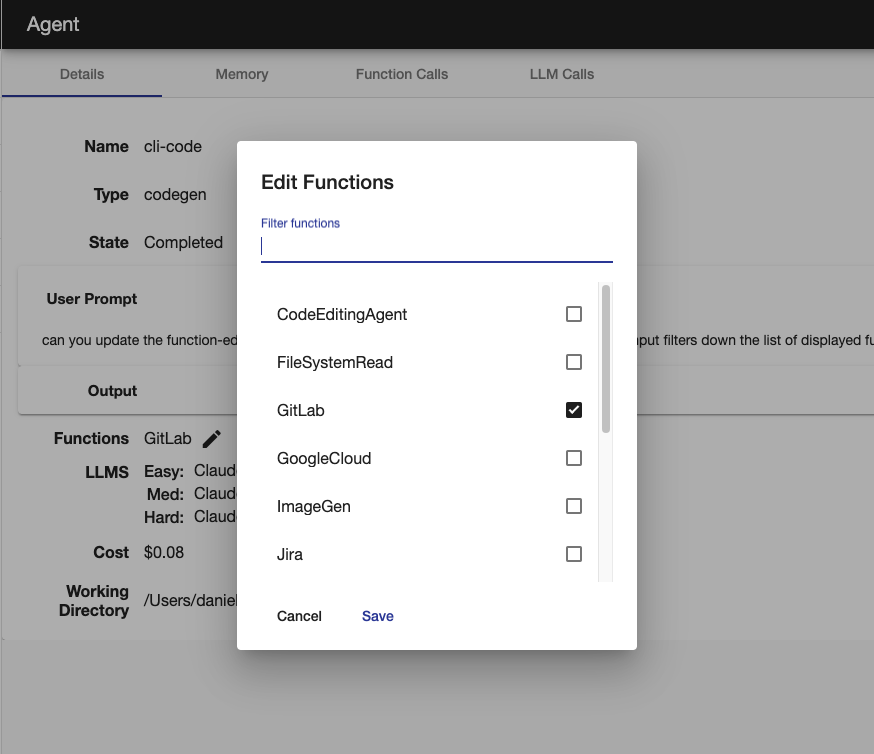

## Creating the Edit Functions UI component

The following prompts were used to create the `function-edit-modal` component, which updates the functions available to an agent:

> In the agent component on the details tab I want to be able to edit the functions available to the agent, similarly to how I can select them in the run agent component, by clicking on an icon button at the start of the functions list to enable the edit mode. Create a New update functions route which the agent component will call. Only use the CodeEditingAgent_runCodeEditWorkflow function to make changes to files. Think it through and you can make the edits in multiple steps
<!-- -->
> in the agent component in the details tab, can you move the functions edit icon button to the right of the functions list, and have the function editing open in a popup modal
<!-- -->
> can you update the function-edit-modal component to have the full list of functions to select from, like in the runAgent component
<!-- -->
> can you update the function-edit-modal component to have the list sorted alphabetically, with the selected functions first. Also remove the duplicated checkboxes on each row
<!-- -->
> can you update the function-edit-modal component to have a search field that filters the visible functions by a fuzzy match with what entered in the search field, and of course showing all functions if the field is empty
<!-- -->
> can you update the function-edit-modal component to remain the same height when the filter input filters down the list of displayed functions to select
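
The sorting and filtering behaviour requested in these prompts can be expressed as a small pure function. This is a minimal TypeScript sketch, not the code the agent produced. The `FunctionOption` shape and the `fuzzyMatch` and `visibleFunctions` helpers are hypothetical. The fuzzy match is a simple case-insensitive, in-order subsequence check rather than any particular fuzzy-search library.

```typescript
// Hypothetical shape of one selectable function in the modal.
interface FunctionOption {
  name: string;
  selected: boolean;
}

// Subsequence fuzzy match: every character of the query must appear in
// the text in the same order (case-insensitive), not necessarily adjacent.
// An empty query matches everything, so the full list is shown.
function fuzzyMatch(text: string, query: string): boolean {
  const haystack = text.toLowerCase();
  const needle = query.trim().toLowerCase();
  let matched = 0;
  for (let i = 0; i < haystack.length && matched < needle.length; i++) {
    if (haystack[i] === needle[matched]) matched++;
  }
  return matched === needle.length;
}

// Filters by the search text, then lists selected functions first,
// with each group sorted alphabetically. filter() returns a new array,
// so the caller's list is not mutated by sort().
function visibleFunctions(options: FunctionOption[], query: string): FunctionOption[] {
  return options
    .filter((opt) => fuzzyMatch(opt.name, query))
    .sort((a, b) => {
      if (a.selected !== b.selected) return a.selected ? -1 : 1;
      return a.name.localeCompare(b.name);
    });
}

const options: FunctionOption[] = [
  { name: 'GitHub_getProjects', selected: false },
  { name: 'FileSystem_readFile', selected: true },
  { name: 'Agent_completed', selected: false },
];

console.log(visibleFunctions(options, '').map((o) => o.name));
// → [ 'FileSystem_readFile', 'Agent_completed', 'GitHub_getProjects' ]
console.log(visibleFunctions(options, 'rf').map((o) => o.name));
// → [ 'FileSystem_readFile' ]
```

Keeping this logic outside the component template makes it easy to unit-test on its own. The fixed-height requirement from the last prompt is a styling concern and is not covered here.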


## Adding LLM integration tests

Starting from the initial Anthropic integration test, this prompt generated equivalent tests for the other LLM services.

> for all the llm services under src/llm/models add a test to llm.int.ts the same as the anthropic example using the cheapest model available for that service

`n code 'for all the llm services...'`

```typescript
import { expect } from 'chai';
import { Claude3_Haiku } from '#llm/models/anthropic';

describe('LLMs', () => {
  // Shared prompt, reused by each service's test
  const SKY_PROMPT = 'What colour is the day sky? Answer in one word.';

  describe('Anthropic', () => {
    const llm = Claude3_Haiku();

    it('should generateText', async () => {
      // temperature 0 keeps the answer as consistent as possible between runs
      const response = await llm.generateText(SKY_PROMPT, null, { temperature: 0 });
      expect(response.toLowerCase()).to.include('blue');
    });
  });
});
```
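
The prompt asks for "the cheapest model available for that service". Below is one way to make that choice explicit. It is a minimal sketch that assumes a hypothetical `ModelInfo` catalogue with per-million-token prices. The service names, model names, and prices are placeholders, not real pricing. Ranking by the sum of input and output price is also an assumed heuristic.

```typescript
// Hypothetical catalogue entry; the project's real model metadata may differ.
interface ModelInfo {
  service: string;           // LLM service the model belongs to
  model: string;             // model identifier
  inputCostPerMTok: number;  // price per million input tokens (placeholder units)
  outputCostPerMTok: number; // price per million output tokens (placeholder units)
}

// Assumed heuristic: weight input and output prices equally.
function blendedCost(m: ModelInfo): number {
  return m.inputCostPerMTok + m.outputCostPerMTok;
}

// Keeps the lowest-cost model seen so far for each service.
function cheapestPerService(models: ModelInfo[]): Record<string, ModelInfo> {
  const cheapest: Record<string, ModelInfo> = {};
  for (const m of models) {
    const current = cheapest[m.service];
    if (current === undefined || blendedCost(m) < blendedCost(current)) {
      cheapest[m.service] = m;
    }
  }
  return cheapest;
}

// Placeholder data for illustration only.
const catalogue: ModelInfo[] = [
  { service: 'serviceA', model: 'large', inputCostPerMTok: 15, outputCostPerMTok: 75 },
  { service: 'serviceA', model: 'small', inputCostPerMTok: 0.25, outputCostPerMTok: 1.25 },
  { service: 'serviceB', model: 'mini', inputCostPerMTok: 0.15, outputCostPerMTok: 0.6 },
];

const picks = cheapestPerService(catalogue);
for (const service of Object.keys(picks)) {
  console.log(`${service}: ${picks[service].model}`);
}
// serviceA: small
// serviceB: mini
```

Each selected model could then back one `describe` block shaped like the Anthropic example above.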
