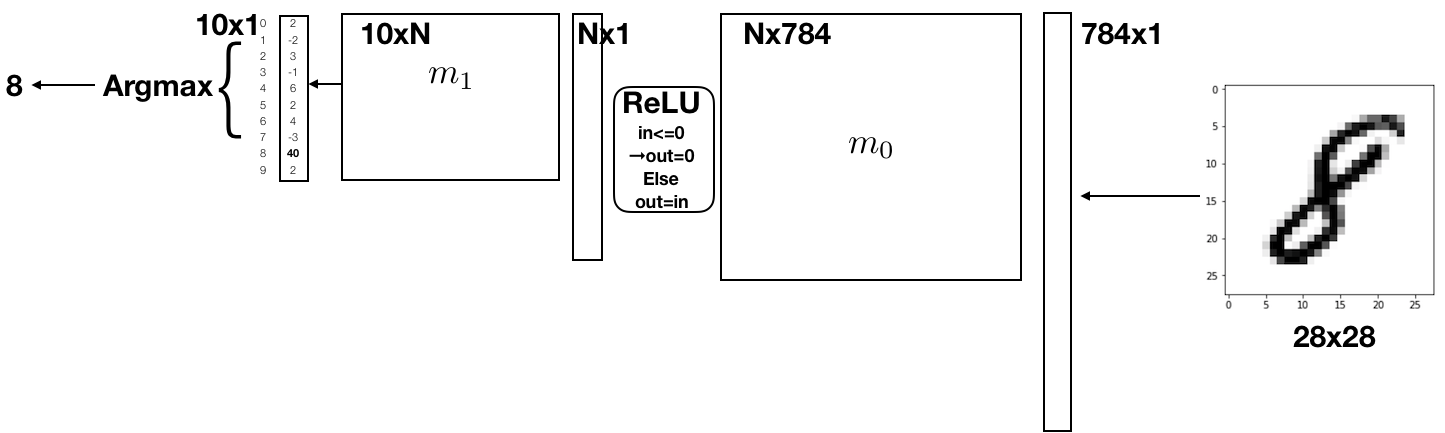

In this project, we implement a neural network in RISC-V assembly language that classifies handwritten digits. As inputs, we use the MNIST data set, which contains 60,000 28x28 images of handwritten digits from 0 to 9. We treat each image as a “flattened” input vector of size 784x1. As in the earlier example, we perform matrix multiplications with the pre-trained weight matrices m0 and m1. Instead of thresholding, we use two different non-linearities: the ReLU and ArgMax functions.
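To make the data flow concrete, here is a minimal Python sketch of the same computation. It assumes the usual ordering (multiply by m0, apply ReLU, multiply by m1, then take the ArgMax of the scores); the helper names and the tiny matrix sizes are illustrative only and are not part of the project code, where the input has 784 rows and the output has 10.

```python
# Sketch of the inference pipeline: m0 * x -> ReLU -> m1 * hidden -> ArgMax.
# Helper names and the toy sizes below are assumptions for illustration.

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][t] * b[t][j] for t in range(k)) for j in range(m)]
            for i in range(n)]

def relu(mat):
    """Replace every negative entry with 0."""
    return [[max(0, v) for v in row] for row in mat]

def argmax(mat):
    """Index of the largest entry of a column vector (first index on ties)."""
    flat = [row[0] for row in mat]
    return flat.index(max(flat))

def classify(m0, m1, x):
    """Return the score column vector and the index of the best score."""
    hidden = relu(matmul(m0, x))
    scores = matmul(m1, hidden)
    return scores, argmax(scores)

# Toy example: 4 inputs, 2 hidden units, 3 classes.
m0 = [[1, -1, 0, 2],
      [0, 1, -3, 1]]
m1 = [[1, 0],
      [0, 1],
      [2, 1]]
x = [[1], [2], [3], [4]]  # "flattened" input as a column vector

scores, digit = classify(m0, m1, x)
print(scores, digit)
```

In the real network the same steps apply with a 784x1 input and a 10x1 score vector, so the ArgMax result is the predicted digit.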

First, draw your own handwritten digits to pass to the neural net. Open any basic drawing program, such as Microsoft Paint, resize the image to 28x28 pixels, draw your digit, and save it as a .bmp file in the directory /inputs/mnist/student_inputs/.

That directory contains bmp_to_bin.py, which turns a .bmp file into a .bin file for the neural net, as well as an example.bmp file. To convert example.bmp, run the following from inside the /inputs/mnist/student_inputs directory:

python bmp_to_bin.py example

This reads the example.bmp file and creates an example.bin file. We can then pass that file to our neural net, alongside the provided m0 and m1 matrices:

java -jar venus.jar main.s -ms 10000000 -it inputs/mnist/bin/m0.bin inputs/mnist/bin/m1.bin inputs/mnist/student_inputs/example.bin output.bin

Running the neural net should write a binary matrix file, output.bin, which contains the scores for each digit, and print the digit with the highest score. You can convert and run your own .bmp files in the same way.
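If you want to inspect the scores in a matrix file such as output.bin, a short script can help. The sketch below assumes the binary matrix layout is two little-endian 32-bit integers (rows, then columns) followed by the entries as 32-bit integers in row-major order; that layout is an assumption here, so check it against the project's file format description. The functions read_matrix and write_matrix are hypothetical helpers, not part of the provided code.

```python
import os
import struct
import tempfile

# ASSUMED layout: int32 rows, int32 cols, then rows*cols int32 entries,
# all little-endian and in row-major order. Verify against the project spec.

def read_matrix(path):
    """Read a binary matrix file into a list of rows."""
    with open(path, "rb") as f:
        data = f.read()
    rows, cols = struct.unpack_from("<ii", data, 0)
    values = struct.unpack_from(f"<{rows * cols}i", data, 8)
    return [list(values[r * cols:(r + 1) * cols]) for r in range(rows)]

def write_matrix(path, matrix):
    """Write a list of rows in the same assumed layout (used for the demo)."""
    rows, cols = len(matrix), len(matrix[0])
    flat = [v for row in matrix for v in row]
    with open(path, "wb") as f:
        f.write(struct.pack("<ii", rows, cols))
        f.write(struct.pack(f"<{rows * cols}i", *flat))

# Round-trip demo with made-up scores, so the script runs without output.bin.
demo = [[3], [-1], [12]]
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.bin")
    write_matrix(demo_path, demo)
    loaded = read_matrix(demo_path)

flat_scores = [v for row in loaded for v in row]
best = flat_scores.index(max(flat_scores))
print(loaded, best)
```

To look at a real run, call read_matrix("output.bin") after the java command above finishes; if the layout assumption holds, the index of the largest score should match the digit that was printed.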